Hi Arthur, before you start your journey, it is important to have a good test environment where we can try any endeavour we please.

Just as important as having a stable test system is being able to recreate it in a few simple steps.

This chapter of our guide will show you how to make Marvin create an Oracle RAC on Oracle Linux 7 with 18c Grid + 18c DB (CDB + 1 PDB).

Try to be patient, and remember that Marvin will complain and won’t take any enjoyment in this or any other task, but I’m sure he will eventually comply.

Let’s go and start exploring this new place!!

We will be using Tim Hall’s RAC creation process, so please make sure you first visit his guide to get the necessary software and tools on your system before we start the process:

If you want to know more about Vagrant, please visit the following links:

https://oracle-base.com/articles/vm/vagrant-a-beginners-guide

https://semaphoreci.com/community/tutorials/getting-started-with-vagrant

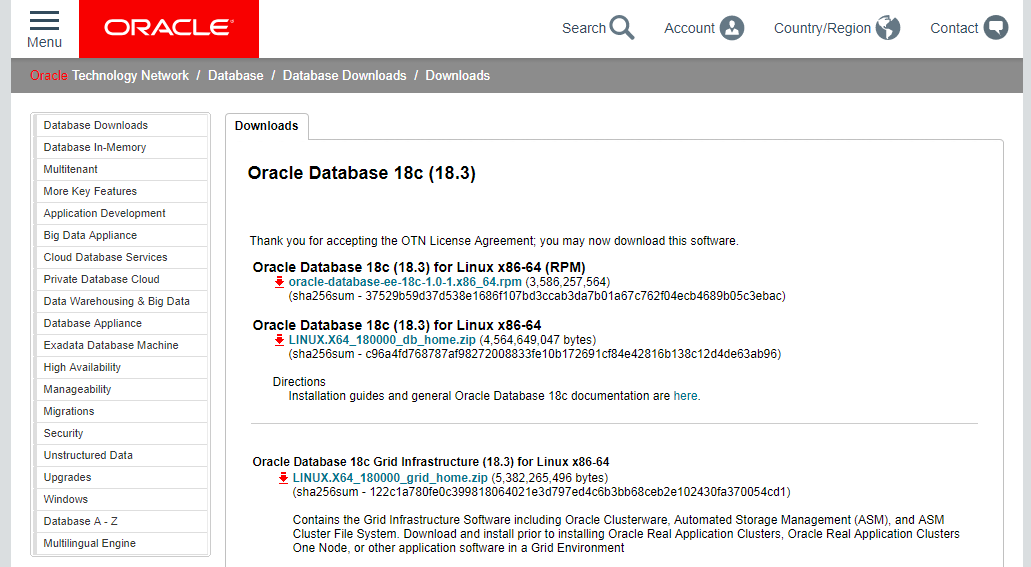

You will need to download 18C DB and Grid Software:

In our case, we are running Vagrant and VirtualBox on Linux, but you can do the same on Windows or Mac. Some of the commands will change a bit, but the general idea is the same.

System requirements are the following:

- At least 6GB of RAM per DB node (10GB recommended); this installation has 2 DB nodes

- At least 1GB of RAM for the DNS node

- Around 150GB of disk space (80GB for the default DATA diskgroup, although you can reduce the size of the disks if you need to)
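To make those numbers a bit more tangible, here is a minimal sketch that adds up the figures from the list above. The function names are mine (hypothetical), and the result only covers the VMs, so leave some extra RAM for the host itself. The 4 disks of 20GB each come from the default shared disk configuration shown later in this guide.

```shell
#!/bin/sh
# Rough host sizing for the RAC described in this guide.
# All function names here are illustrative, not part of any tool.

# required_ram_gb <db_nodes> <gb_per_db_node> <dns_gb>
# Prints the RAM needed by all VMs together, in GB.
required_ram_gb() {
  echo $(( $1 * $2 + $3 ))
}

# asm_space_gb <disk_count> <disk_size_gb>
# Prints the space taken by the shared ASM disks, in GB.
asm_space_gb() {
  echo $(( $1 * $2 ))
}

# host_ram_gb
# Prints the total RAM of a Linux host in whole GB (reads /proc/meminfo).
host_ram_gb() {
  awk '/^MemTotal:/ { printf "%d\n", $2 / 1024 / 1024 }' /proc/meminfo
}

echo "Minimum RAM for the VMs:     $(required_ram_gb 2 6 1) GB"
echo "Recommended RAM for the VMs: $(required_ram_gb 2 10 1) GB"
echo "Default ASM disk space:      $(asm_space_gb 4 20) GB"
```

On a Linux host you can compare the first two figures with the output of `host_ram_gb` before you start.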

19c is here already

This guide was created to build an 18c test cluster, but you can already follow the same process to get a new 19c system running. Tim Hall has already updated his repository with the new version:

https://github.com/oraclebase/vagrant

The steps are the same; you just need to download the 19c software and make sure you use the new folder "vagrant/rac/ol7_19/" instead of "vagrant/rac/ol7_183".

More info in his blog post:

https://oracle-base.com/blog/2019/02/28/oracle-database-19c-installations-rac-data-guard-and-upgrades/

First, let’s clone Tim’s Git repository (there is a lot of good stuff in there, not only this RAC):

arthur@marvin:~/# git clone https://github.com/oraclebase/vagrant.git

Doing this, we get the following tree structure on our server:

arthur@marvin:~/# cd vagrant/

arthur@marvin:~/vagrant# tree --charset=ascii

.

|-- dataguard

| |-- ol7_121

| | |-- config

| | | |-- install.env

| | | `-- vagrant.yml

| | |-- node1

| | | |-- scripts

| | | | |-- oracle_create_database.sh

| | | | |-- oracle_user_environment_setup.sh

| | | | |-- root_setup.sh

| | | | `-- setup.sh

| | | `-- Vagrantfile

[.....]

| |-- rac

| | |-- ol7_122

[....]

| | |-- ol7_183

| | | |-- config

| | | | |-- install.env

| | | | `-- vagrant.yml

| | | |-- dns

| | | | |-- scripts

| | | | | |-- root_setup.sh

| | | | | `-- setup.sh

| | | | |-- Vagrantfile

| | | | `-- vagrant.log

| | | |-- node1

| | | | |-- ol7_183_rac1_u01.vdi

| | | | |-- scripts

| | | | | |-- oracle_create_database.sh

| | | | | |-- oracle_db_software_installation.sh

| | | | | |-- oracle_grid_software_config.sh

| | | | | |-- oracle_grid_software_installation.sh

| | | | | |-- oracle_user_environment_setup.sh

| | | | | |-- root_setup.sh

| | | | | `-- setup.sh

| | | | |-- software

| | | | | `-- put_software_here.txt

| | | | |-- start

| | | | |-- Vagrantfile

| | | | `-- vagrant.log

| | | |-- node2

| | | | |-- scripts

| | | | | |-- oracle_user_environment_setup.sh

| | | | | |-- root_setup.sh

| | | | | `-- setup.sh

| | | | |-- Vagrantfile

| | | | `-- vagrant.log

| | | |-- README.md

| | | |-- shared_disks

| | | `-- shared_scripts

| | | |-- configure_chrony.sh

| | | |-- configure_hostname.sh

| | | |-- configure_hosts_base.sh

| | | |-- configure_hosts_scan.sh

| | | |-- configure_shared_disks.sh

| | | |-- install_os_packages.sh

| | | `-- prepare_u01_disk.sh

| | `-- README.md

| `-- README.md

|-- vagrant_2.2.3_x86_64.rpm

|-- vagrant.lo

`-- Videos

Make sure you copy the 18c DB and Grid software to RAC node1:

arthur@marvin:~/vagrant# cd rac/ol7_183/node1/software/

arthur@marvin:~/vagrant/rac/ol7_183/node1/software# pwd

/home/arthur/vagrant/rac/ol7_183/node1/software

arthur@marvin:~/vagrant/rac/ol7_183/node1/software# ls -lrth

total 9.3G

-rw-r--r--. 1 arthur arthur 4.3G Jul 25 19:33 LINUX.X64_180000_db_home.zip

-rw-r--r--. 1 arthur arthur 5.1G Jul 25 19:41 LINUX.X64_180000_grid_home.zip

-rw-rw-r--. 1 arthur arthur 104 Jan 11 22:27 put_software_here.txt

arthur@marvin:~/vagrant/rac/ol7_183/node1/software#

Now that we have that, we need to modify the location of the RAC shared disks. To do that, edit the file vagrant.yml inside the folder ‘rac/ol7_183/config/’.

In my case, I just left them in "/home/arthur/rac_shared_disks". Just make sure you have enough space: each disk is 20GB by default, but you can change that with the "asm_disk_size" variable in the same file.

arthur@marvin:~/vagrant/rac/ol7_183/shared_disks# cat ../config/vagrant.yml

shared:
  box: bento/oracle-7.6
  non_rotational: 'on'
  asm_disk_1: /home/arthur/rac_shared_disks/asm_disk_1.vdi
  asm_disk_2: /home/arthur/rac_shared_disks/asm_disk_2.vdi
  asm_disk_3: /home/arthur/rac_shared_disks/asm_disk_3.vdi
  asm_disk_4: /home/arthur/rac_shared_disks/asm_disk_4.vdi
  asm_disk_size: 20

dns:
  vm_name: ol7_183_dns
  mem_size: 1024

OK, let’s start the fun part and get the RAC created and deployed!

One of the advantages of using Vagrant is that it checks each box and installs any required service, or even the OS, if needed. So if you need Oracle Linux 7.6, it will download it (if available), and it will do the same with each component needed.

It is important to start the machines in the following order so everything completes correctly. To start the RAC, all we have to do is:

Start the DNS server.

cd dns
vagrant up

Start the second node of the cluster. This must be running before you start the first node.

cd ../node2
vagrant up

Start the first node of the cluster. This will perform all of the installation operations. Depending on the spec of the host system, this could take a long time. On one of my servers it took about 3.5 hours to complete.

cd ../node1
vagrant up

However, since the first deployment will take some time, I recommend you use the following command instead, so you also get a log to check in case there is any issue:

arthur@marvin:~/vagrant/rac/ol7_183/node2# nohup vagrant up &
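When its output is not redirected, nohup normally appends it to a file called nohup.out in the current directory. If you would rather pick the log file name yourself, here is a minimal sketch of a small wrapper. The function name `run_logged` and the log file name used in the note below are my own (hypothetical) choices, not something that comes with Vagrant or Tim’s repository.

```shell
#!/bin/sh
# run_logged <logfile> <command> [args...]
# Starts the command in the background, immune to hangups, with stdout and
# stderr written to <logfile>. Prints the PID of the background process.
run_logged() {
  logfile=$1
  shift
  nohup "$@" > "$logfile" 2>&1 &
  echo "$!"
}
```

From the dns, node2 or node1 directory you could then run `run_logged vagrant_up.log vagrant up` and follow the progress with `tail -f vagrant_up.log`.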

Start with the DNS server. Notice how Vagrant downloads the Oracle Linux image directly, avoiding any extra steps such as downloading ISO images, and how it installs any necessary packages (dnsmasq in this case):

arthur@marvin:~/vagrant/rac/ol7_183/dns# nohup vagrant up &

Bringing machine 'default' up with 'virtualbox' provider...

==> default: Box 'bento/oracle-7.6' could not be found. Attempting to find and install...

default: Box Provider: virtualbox

default: Box Version: >= 0

==> default: Loading metadata for box 'bento/oracle-7.6'

default: URL: https://vagrantcloud.com/bento/oracle-7.6

==> default: Adding box 'bento/oracle-7.6' (v201812.27.0) for provider: virtualbox

default: Downloading: https://vagrantcloud.com/bento/boxes/oracle-7.6/versions/201812.27.0/providers/virtualbox.box

default: Download redirected to host: vagrantcloud-files-production.s3.amazonaws.com

default: Progress: 39% (Rate: 5969k/s, Estimated time remaining: 0:01:25)

[....]

default: Running: inline script

default: ******************************************************************************

default: /vagrant_config/install.env: line 62: -1: substring expression < 0

default: Prepare yum with the latest repos. Sat Jan 12 16:57:48 UTC 2019

default: ******************************************************************************

[....]

default: ******************************************************************************

default: Install dnsmasq. Sat Jan 12 16:57:50 UTC 2019

default: ******************************************************************************

default: Loaded plugins: ulninfo

default: Resolving Dependencies

default: --> Running transaction check

default: ---> Package dnsmasq.x86_64 0:2.76-7.el7 will be installed

default: --> Finished Dependency Resolution

default:

default: Dependencies Resolved

default:

default: ================================================================================

default: Package Arch Version Repository Size

default: ================================================================================

default: Installing:

default: dnsmasq x86_64 2.76-7.el7 ol7_latest 277 k

default:

default: Transaction Summary

default: ================================================================================

default: Install 1 Package

default:

default: Total download size: 277 k

default: Installed size: 586 k

default: Downloading packages:

default: Running transaction check

default: Running transaction test

default: Transaction test succeeded

default: Running transaction

default: Installing : dnsmasq-2.76-7.el7.x86_64 1/1

default:

default: Verifying : dnsmasq-2.76-7.el7.x86_64 1/1

default:

default:

default: Installed:

default: dnsmasq.x86_64 0:2.76-7.el7

default:

default: Complete!

default: Created symlink from /etc/systemd/system/multi-user.target.wants/dnsmasq.service to /usr/lib/systemd/system/dnsmasq.service.

Start node2:

-- Start the second node of the cluster. This must be running before you start the first node.

arthur@marvin:~/vagrant/rac/ol7_183/node2# nohup vagrant up &

Bringing machine 'default' up with 'virtualbox' provider...

==> default: Checking if box 'bento/oracle-7.6' version '201812.27.0' is up to date...

==> default: Clearing any previously set forwarded ports...

==> default: Fixed port collision for 22 => 2222. Now on port 2200.

==> default: Clearing any previously set network interfaces...

==> default: Preparing network interfaces based on configuration...

default: Adapter 1: nat

default: Adapter 2: intnet

[....]

And finally, let’s start node1. This will perform all of the installation operations. As you can see below, it took ~3.5 hours for this first start, but that won’t be the case on later reboots, since all the installation tasks will already be completed.

Node1 failure

If node1 creation and the CRS/DB deployment fail for any reason (lack of space, session disconnected during the process, etc.), it is better to destroy both nodes and start the process again, starting node2 first and then node1. If not, you may face issues if you retry the process just for node1 (see how to destroy each component at the end of this guide).
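If you ever need that recovery, here is a minimal sketch of it as a shell function. The function name `rebuild_rac_nodes` and the `VAGRANT` override variable are my own (hypothetical) additions. The sketch leaves the DNS VM alone and only uses `vagrant destroy -f` and `vagrant up`, the same commands shown elsewhere in this guide.

```shell
#!/bin/sh
# VAGRANT can be overridden (for example VAGRANT=echo) to preview the
# commands without touching any VM.
VAGRANT=${VAGRANT:-vagrant}

# rebuild_rac_nodes <rac_dir>
# Destroys node1 and node2, then recreates node2 first and node1 second.
# <rac_dir> is the folder that contains the node1 and node2 directories.
rebuild_rac_nodes() {
  rac_dir=$1
  for node in node1 node2; do
    (cd "$rac_dir/$node" && "$VAGRANT" destroy -f) || return 1
  done
  for node in node2 node1; do
    (cd "$rac_dir/$node" && "$VAGRANT" up) || return 1
  done
}
```

With the layout used in this guide, the call would be `rebuild_rac_nodes ~/vagrant/rac/ol7_183`. Remember that the node1 step is the long one.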

arthur@marvin:~/vagrant/rac/ol7_183/node1# nohup vagrant up &

Bringing machine 'default' up with 'virtualbox' provider...

==> default: Importing base box 'bento/oracle-7.6'...

==> default: Matching MAC address for NAT networking...

==> default: Checking if box 'bento/oracle-7.6' version '201812.27.0' is up to date...

==> default: Setting the name of the VM: ol7_183_rac1

==> default: Fixed port collision for 22 => 2222. Now on port 2201.

==> default: Clearing any previously set network interfaces...

==> default: Preparing network interfaces based on configuration...

default: Adapter 1: nat

default: Adapter 2: intnet

default: Adapter 3: intnet

==> default: Forwarding ports...

default: 1521 (guest) => 1521 (host) (adapter 1)

default: 5500 (guest) => 5500 (host) (adapter 1)

[....]

default: your host and reload your VM.

default:

default: Guest Additions Version: 5.2.22

default: VirtualBox Version: 6.0

==> default: Configuring and enabling network interfaces...

==> default: Mounting shared folders...

default: /vagrant => /home/arthur/vagrant/rac/ol7_183/node1

default: /vagrant_config => /home/arthur/vagrant/rac/ol7_183/config

default: /vagrant_scripts => /home/arthur/vagrant/rac/ol7_183/shared_scripts

==> default: Running provisioner: shell...

default: Running: inline script

default: ******************************************************************************

default: Prepare /u01 disk. Sat Jan 12 17:12:07 UTC 2019

default: ******************************************************************************

[....]

default: ******************************************************************************

default: Output from srvctl status database -d cdbrac Sat Jan 12 20:48:00 UTC 2019

default: ******************************************************************************

default: Instance cdbrac1 is running on node ol7-183-rac1

default: Instance cdbrac2 is running on node ol7-183-rac2

default: ******************************************************************************

default: Output from v$active_instances Sat Jan 12 20:48:04 UTC 2019

default: ******************************************************************************

default:

default: SQL*Plus: Release 18.0.0.0.0 - Production on Sat Jan 12 20:48:04 2019

default: Version 18.3.0.0.0

default:

default: Copyright (c) 1982, 2018, Oracle. All rights reserved.

default:

default: Connected to:

default: Oracle Database 18c Enterprise Edition Release 18.0.0.0.0 - Production

default: Version 18.3.0.0.0

default: SQL>

default:

default: INST_NAME

default: --------------------------------------------------------------------------------

default: ol7-183-rac1.localdomain:cdbrac1

default: ol7-183-rac2.localdomain:cdbrac2

default:

default: SQL>

default: Disconnected from Oracle Database 18c Enterprise Edition Release 18.0.0.0.0 - Production

default: Version 18.3.0.0.0

Let’s check the RAC

If you are in the directory from where we have started the VM, you can simply use "vagrant ssh" to connect to it:

Vagrant SSH

arthur@marvin:~/vagrant/rac/ol7_183/node1# vagrant ssh

Last login: Mon Jan 14 19:48:50 2019 from 10.0.2.2

[vagrant@ol7-183-rac1 ~]$ w

19:49:02 up 11 min, 1 user, load average: 1.51, 2.02, 1.31

USER TTY FROM LOGIN@ IDLE JCPU PCPU WHAT

vagrant pts/0 10.0.2.2 19:49 2.00s 0.07s 0.06s w

[vagrant@ol7-183-rac1 ~]$

You should now be able to see the RAC resources running (allow 5-10 minutes for CRS/DB to start after the cluster deployment is completed):

arthur@marvin:~/vagrant/rac/ol7_183/node1# vagrant ssh

Last login: Sun Jan 20 15:28:30 2019 from 10.0.2.2

[vagrant@ol7-183-rac1 ~]$ sudo su - oracle

Last login: Sun Jan 20 15:45:51 UTC 2019 on pts/0

[oracle@ol7-183-rac1 ~]$ ps -ef |grep pmon

oracle 9798 1 0 15:33 ? 00:00:00 asm_pmon_+ASM1

oracle 10777 1 0 15:33 ? 00:00:00 ora_pmon_cdbrac1

oracle 25584 25543 0 15:56 pts/1 00:00:00 grep --color=auto pmon

[oracle@ol7-183-rac1 ~]$

[oracle@ol7-183-rac1 ~]$ echo $ORACLE_SID

cdbrac1

[oracle@ol7-183-rac1 ~]$ sqlplus / as sysdba

SQL*Plus: Release 18.0.0.0.0 - Production on Sun Jan 20 16:00:04 2019

Version 18.3.0.0.0

Copyright (c) 1982, 2018, Oracle. All rights reserved.

Connected to:

Oracle Database 18c Enterprise Edition Release 18.0.0.0.0 - Production

Version 18.3.0.0.0

SQL>

DB_NAME INSTANCE_NAME CDB HOST_NAME STARTUP DATABASE_ROLE OPEN_MODE STATUS

--------- -------------------- --- ------------------------------ ---------------------------------------- ---------------- -------------------- ------------

CDBRAC cdbrac1 YES ol7-183-rac1.localdomain 20-JAN-2019 15:33:51 PRIMARY READ WRITE OPEN

CDBRAC cdbrac2 YES ol7-183-rac2.localdomain 20-JAN-2019 15:33:59 PRIMARY READ WRITE OPEN

INST_ID CON_ID NAME OPEN_MODE OPEN_TIME STATUS

---------- ---------- -------------------- ---------- ---------------------------------------- ----------

1 2 PDB$SEED READ ONLY 20-JAN-19 03.35.25.561 PM +00:00 NORMAL

2 2 PDB$SEED READ ONLY 20-JAN-19 03.35.58.937 PM +00:00 NORMAL

1 3 PDB1 MOUNTED NORMAL

2 3 PDB1 MOUNTED NORMAL

NAME TOTAL_GB Available_GB REQ_MIR_FREE_GB %_USED_SAFELY

------------------------------ ------------ ------------ --------------- -------------

DATA 80 52 0 35.2803282

[oracle@ol7-183-rac1 ~]$ . oraenv

ORACLE_SID = [cdbrac1] ? +ASM1

ORACLE_HOME = [/home/oracle] ? /u01/app/18.0.0/grid

The Oracle base remains unchanged with value /u01/app/oracle

[oracle@ol7-183-rac1 ~]$

[oracle@ol7-183-rac1 ~]$ crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr

ONLINE ONLINE ol7-183-rac1 STABLE

ONLINE ONLINE ol7-183-rac2 STABLE

ora.DATA.dg

ONLINE ONLINE ol7-183-rac1 STABLE

ONLINE ONLINE ol7-183-rac2 STABLE

ora.LISTENER.lsnr

ONLINE ONLINE ol7-183-rac1 STABLE

ONLINE ONLINE ol7-183-rac2 STABLE

ora.chad

ONLINE ONLINE ol7-183-rac1 STABLE

ONLINE ONLINE ol7-183-rac2 STABLE

ora.net1.network

ONLINE ONLINE ol7-183-rac1 STABLE

ONLINE ONLINE ol7-183-rac2 STABLE

ora.ons

ONLINE ONLINE ol7-183-rac1 STABLE

ONLINE ONLINE ol7-183-rac2 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE ol7-183-rac1 STABLE

ora.LISTENER_SCAN2.lsnr

1 ONLINE ONLINE ol7-183-rac2 STABLE

ora.LISTENER_SCAN3.lsnr

1 ONLINE ONLINE ol7-183-rac2 STABLE

ora.MGMTLSNR

1 ONLINE ONLINE ol7-183-rac2 169.254.14.104 192.1

68.1.102,STABLE

ora.asm

1 ONLINE ONLINE ol7-183-rac1 Started,STABLE

2 ONLINE ONLINE ol7-183-rac2 Started,STABLE

3 OFFLINE OFFLINE STABLE

ora.cdbrac.db

1 ONLINE ONLINE ol7-183-rac1 Open,HOME=/u01/app/o

racle/product/18.0.0

/dbhome_1,STABLE

2 ONLINE ONLINE ol7-183-rac2 Open,HOME=/u01/app/o

racle/product/18.0.0

/dbhome_1,STABLE

ora.cvu

1 ONLINE ONLINE ol7-183-rac2 STABLE

ora.mgmtdb

1 ONLINE ONLINE ol7-183-rac2 Open,STABLE

ora.ol7-183-rac1.vip

1 ONLINE ONLINE ol7-183-rac1 STABLE

ora.ol7-183-rac2.vip

1 ONLINE ONLINE ol7-183-rac2 STABLE

ora.qosmserver

1 ONLINE ONLINE ol7-183-rac2 STABLE

ora.scan1.vip

1 ONLINE ONLINE ol7-183-rac1 STABLE

ora.scan2.vip

1 ONLINE ONLINE ol7-183-rac2 STABLE

ora.scan3.vip

1 ONLINE ONLINE ol7-183-rac2 STABLE

--------------------------------------------------------------------------------

[oracle@ol7-183-rac1 ~]$

If you want to check the current status of the VMs, you can use the following commands from our host marvin:

arthur@marvin:~# vagrant global-status

id name provider state directory

---------------------------------------------------------------------------

40d79c9 default virtualbox running /home/arthur/vagrant/rac/ol7_183/dns

94596a3 default virtualbox running /home/arthur/vagrant/rac/ol7_183/node2

87fd155 default virtualbox running /home/arthur/vagrant/rac/ol7_183/node1

The above shows information about all known Vagrant environments

on this machine. This data is cached and may not be completely

up-to-date (use "vagrant global-status --prune" to prune invalid

entries). To interact with any of the machines, you can go to that

directory and run Vagrant, or you can use the ID directly with

Vagrant commands from any directory. For example:

"vagrant destroy 1a2b3c4d"

arthur@marvin:~#

arthur@marvin:~# VBoxManage list runningvms

"ol7_183_dns" {43498f5d-85b4-404e-8720-caa38de6b496}

"ol7_183_rac2" {21c779b5-e2da-44af-a121-89a8c2bfc3c6}

"ol7_183_rac1" {1f5718bc-140a-4470-aa0a-4a9ddb9215d7}

arthur@marvin:~#

To show you how quickly the system restarts, here is the time it takes to start each VM once the RAC has been provisioned for the first time:
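A note on `start_rac`: it is not a Vagrant command and it is not part of Tim’s repository, and its definition is not shown in this guide. The sketch below is only my assumption of what such a helper could look like: it runs `vagrant up` in the dns, node2 and node1 directories, in that order, and reports the elapsed seconds for each. The real helper seems to use `time` (note the real/user/sys lines in the output), so treat the `RAC_DIR` and `VAGRANT` variables and the timing method as hypothetical.

```shell
#!/bin/sh
# Both variables can be overridden from the environment.
VAGRANT=${VAGRANT:-vagrant}
RAC_DIR=${RAC_DIR:-$HOME/vagrant/rac/ol7_183}

# start_rac
# Starts the three VMs in the order this guide requires and prints how
# many seconds each "vagrant up" took. Stops at the first failure.
start_rac() {
  for vm in dns node2 node1; do
    started=$(date +%s)
    (cd "$RAC_DIR/$vm" && "$VAGRANT" up) || return 1
    echo "$vm started in $(( $(date +%s) - started )) seconds"
  done
}
```

Put the function in your shell profile (or in a small script) and you can start the whole cluster from any directory.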

arthur@marvin:~/vagrant/rac/ol7_183/node2# start_rac

Bringing machine 'default' up with 'virtualbox' provider...

==> default: Checking if box 'bento/oracle-7.6' version '201812.27.0' is up to date...

==> default: Clearing any previously set forwarded ports...

==> default: Clearing any previously set network interfaces...

==> default: Preparing network interfaces based on configuration...

default: Adapter 1: nat

default: Adapter 2: intnet

==> default: Forwarding ports...

default: 22 (guest) => 2222 (host) (adapter 1)

==> default: Running 'pre-boot' VM customizations...

==> default: Booting VM...

==> default: Waiting for machine to boot. This may take a few minutes...

default: SSH address: 127.0.0.1:2222

default: SSH username: vagrant

default: SSH auth method: private key

default: Warning: Remote connection disconnect. Retrying...

default: Warning: Remote connection disconnect. Retrying...

default: Warning: Remote connection disconnect. Retrying...

default: Warning: Remote connection disconnect. Retrying...

default: Warning: Remote connection disconnect. Retrying...

default: Warning: Remote connection disconnect. Retrying...

default: Warning: Remote connection disconnect. Retrying...

default: Warning: Remote connection disconnect. Retrying...

==> default: Machine booted and ready!

==> default: Checking for guest additions in VM...

default: The guest additions on this VM do not match the installed version of

default: VirtualBox! In most cases this is fine, but in rare cases it can

default: prevent things such as shared folders from working properly. If you see

default: shared folder errors, please make sure the guest additions within the

default: virtual machine match the version of VirtualBox you have installed on

default: your host and reload your VM.

default:

default: Guest Additions Version: 5.2.22

default: VirtualBox Version: 6.0

==> default: Configuring and enabling network interfaces...

==> default: Mounting shared folders...

default: /vagrant => /home/arthur/vagrant/rac/ol7_183/dns

default: /vagrant_config => /home/arthur/vagrant/rac/ol7_183/config

default: /vagrant_scripts => /home/arthur/vagrant/rac/ol7_183/shared_scripts

==> default: Machine already provisioned. Run `vagrant provision` or use the `--provision`

==> default: flag to force provisioning. Provisioners marked to run always will still run.

real 2m46.422s

user 0m33.583s

sys 0m38.177s

Bringing machine 'default' up with 'virtualbox' provider...

==> default: Checking if box 'bento/oracle-7.6' version '201812.27.0' is up to date...

==> default: Clearing any previously set forwarded ports...

==> default: Fixed port collision for 22 => 2222. Now on port 2200.

==> default: Clearing any previously set network interfaces...

==> default: Preparing network interfaces based on configuration...

default: Adapter 1: nat

default: Adapter 2: intnet

default: Adapter 3: intnet

==> default: Forwarding ports...

default: 1521 (guest) => 1522 (host) (adapter 1)

default: 5500 (guest) => 5502 (host) (adapter 1)

default: 22 (guest) => 2200 (host) (adapter 1)

==> default: Running 'pre-boot' VM customizations...

==> default: Booting VM...

==> default: Waiting for machine to boot. This may take a few minutes...

default: SSH address: 127.0.0.1:2200

default: SSH username: vagrant

default: SSH auth method: private key

default: Warning: Remote connection disconnect. Retrying...

default: Warning: Remote connection disconnect. Retrying...

default: Warning: Remote connection disconnect. Retrying...

default: Warning: Remote connection disconnect. Retrying...

default: Warning: Remote connection disconnect. Retrying...

default: Warning: Remote connection disconnect. Retrying...

default: Warning: Remote connection disconnect. Retrying...

==> default: Machine booted and ready!

==> default: Checking for guest additions in VM...

default: The guest additions on this VM do not match the installed version of

default: VirtualBox! In most cases this is fine, but in rare cases it can

default: prevent things such as shared folders from working properly. If you see

default: shared folder errors, please make sure the guest additions within the

default: virtual machine match the version of VirtualBox you have installed on

default: your host and reload your VM.

default:

default: Guest Additions Version: 5.2.22

default: VirtualBox Version: 6.0

==> default: Configuring and enabling network interfaces...

==> default: Mounting shared folders...

default: /vagrant => /home/arthur/vagrant/rac/ol7_183/node2

default: /vagrant_config => /home/arthur/vagrant/rac/ol7_183/config

default: /vagrant_scripts => /home/arthur/vagrant/rac/ol7_183/shared_scripts

==> default: Machine already provisioned. Run `vagrant provision` or use the `--provision`

==> default: flag to force provisioning. Provisioners marked to run always will still run.

real 2m49.464s

user 0m28.160s

sys 0m31.663s

Bringing machine 'default' up with 'virtualbox' provider...

==> default: Checking if box 'bento/oracle-7.6' version '201812.27.0' is up to date...

==> default: Clearing any previously set forwarded ports...

==> default: Fixed port collision for 22 => 2222. Now on port 2201.

==> default: Clearing any previously set network interfaces...

==> default: Preparing network interfaces based on configuration...

default: Adapter 1: nat

default: Adapter 2: intnet

default: Adapter 3: intnet

==> default: Forwarding ports...

default: 1521 (guest) => 1521 (host) (adapter 1)

default: 5500 (guest) => 5500 (host) (adapter 1)

default: 22 (guest) => 2201 (host) (adapter 1)

==> default: Running 'pre-boot' VM customizations...

==> default: Booting VM...

==> default: Waiting for machine to boot. This may take a few minutes...

default: SSH address: 127.0.0.1:2201

default: SSH username: vagrant

default: SSH auth method: private key

default: Warning: Remote connection disconnect. Retrying...

default: Warning: Remote connection disconnect. Retrying...

default: Warning: Remote connection disconnect. Retrying...

default: Warning: Remote connection disconnect. Retrying...

default: Warning: Remote connection disconnect. Retrying...

default: Warning: Remote connection disconnect. Retrying...

default: Warning: Remote connection disconnect. Retrying...

==> default: Machine booted and ready!

==> default: Checking for guest additions in VM...

default: The guest additions on this VM do not match the installed version of

default: VirtualBox! In most cases this is fine, but in rare cases it can

default: prevent things such as shared folders from working properly. If you see

default: shared folder errors, please make sure the guest additions within the

default: virtual machine match the version of VirtualBox you have installed on

default: your host and reload your VM.

default:

default: Guest Additions Version: 5.2.22

default: VirtualBox Version: 6.0

==> default: Configuring and enabling network interfaces...

==> default: Mounting shared folders...

default: /vagrant => /home/arthur/vagrant/rac/ol7_183/node1

default: /vagrant_config => /home/arthur/vagrant/rac/ol7_183/config

default: /vagrant_scripts => /home/arthur/vagrant/rac/ol7_183/shared_scripts

==> default: Machine already provisioned. Run `vagrant provision` or use the `--provision`

==> default: flag to force provisioning. Provisioners marked to run always will still run.

real 2m50.626s

user 0m27.728s

sys 0m32.275s

arthur@marvin:~/vagrant/rac/ol7_183/node1#

If you don’t want to use "vagrant ssh", you can use "vagrant port" to check which ports each VM has forwarded and connect using ssh directly. This also allows you to connect as a user other than vagrant.

(User/Pass details are in vagrant/rac/ol7_183/config/install.env)

arthur@marvin:~/vagrant/rac/ol7_183/node1# vagrant port

The forwarded ports for the machine are listed below. Please note that

these values may differ from values configured in the Vagrantfile if the

provider supports automatic port collision detection and resolution.

22 (guest) => 2201 (host)

1521 (guest) => 1521 (host)

5500 (guest) => 5500 (host)

arthur@marvin:~/vagrant/rac/ol7_183/node1#

arthur@marvin:~/vagrant/rac/ol7_183/node1# ssh -p 2201 oracle@localhost

The authenticity of host '[localhost]:2201 ([127.0.0.1]:2201)' can't be established.

ECDSA key fingerprint is SHA256:/1BS9w7rgYxHc6uPe4YFvTX7oJcNFKjOpS8yWZRgXK8.

ECDSA key fingerprint is MD5:6d:3f:4b:82:6a:80:3c:15:3b:d4:dd:c1:42:f1:95:5f.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '[localhost]:2201' (ECDSA) to the list of known hosts.

oracle@localhost's password:

Last login: Sat Jan 26 16:15:58 2019

[oracle@ol7-183-rac1 ~]$
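Since Vagrant can move the SSH port around when it finds a collision (2222, 2200, 2201...), it can be handy to look the port up instead of typing it. Here is a minimal sketch that reads the `vagrant port` output shown above; the function name `host_port_for` is hypothetical, and the parsing assumes the "guest => host" line format seen in this guide.

```shell
#!/bin/sh
# host_port_for <guest_port>
# Reads "vagrant port" output on stdin and prints the host port that is
# forwarded to <guest_port>. Prints nothing if the port is not forwarded.
host_port_for() {
  awk -v guest="$1" '$2 == "(guest)" && $1 == guest { print $4 }'
}
```

From the node1 directory, `ssh -p "$(vagrant port | host_port_for 22)" oracle@localhost` would then connect without hard-coding 2201. If your Vagrant version supports it, `vagrant port --guest 22` should give you the same number directly.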

arthur@marvin:~# scp -P2201 -pr p13390677_112040_Linux-x86-64_* oracle@localhost:/u01/Installers/

oracle@localhost's password:

p13390677_112040_Linux-x86-64_1of7.zip 11% 157MB 6.5MB/s 03:00 ETA

In case you want to delete the cluster, instead of deleting the whole directory, you can just "destroy" each component:

arthur@marvin:~/vagrant/rac/ol7_183# du -sh *

8.0K config

80K dns

22G node1

22G node2

arthur@marvin:~/vagrant/rac/ol7_183/node2# vagrant destroy -f

==> default: Destroying VM and associated drives...

arthur@marvin:~/vagrant/rac/ol7_183/node2#

arthur@marvin:~/vagrant/rac/ol7_183# du -sh *

8.0K config

80K dns

22G node1

28K node2

Well, that’s it for this guide, at least for now.

I’m sure that if you build one of these, you will find more interesting things to do with it and, like every good destination, we will end up coming back to add some extra nuggets on how to personalize the cluster to our taste.
